Let's start with a short introduction. Docker is represented by a logo with a friendly whale... ha ha ha, you are reading it correctly, I said "friendly whale". Jokes aside, Docker is an open-source project that makes it easy to deploy a software application inside a software container. Its basic functionality is resource isolation, exposed through a user-friendly API.

Connecting with Analogy........

The basic idea behind Docker can be explained with a simple analogy. Consider the transport industry: goods have to be moved by different means, such as forklifts, trucks, cranes and ships. These goods come in different sizes and shapes and have different storage requirements. Historically, moving them was a painful process that depended on manual intervention at every transit point for loading and unloading.

It all changed with the introduction of intermodal containers. An intermodal container is a large, standardized container designed and built for freight transport. It can be moved between different modes of transport, from ship to rail to truck, without unloading and reloading its cargo. Because these containers come in standard sizes and are manufactured with transport in mind, all the relevant machinery can be designed to work with minimal manual intervention. An additional benefit of sealed containers is that they preserve the internal environment, such as temperature and humidity, for sensitive goods.

As a result, the transport industry can stop worrying about loading, unloading and reloading. This is where Docker comes in: it brings similar advantages to the software industry.

Virtual Machines vs Docker........

Let us take a quick look at how virtual machines differ from Docker containers. It is important to understand the fine line between the two, and the difference becomes apparent when you look at the following diagram.

An application running in a VM requires a full instance of the guest OS plus its supporting libraries. Containers, on the other hand, share the host's OS kernel. The hypervisor is comparable to the container engine (represented as Docker in the image) in the sense that it manages the life cycle of the virtual machines, just as the engine manages the life cycle of the containers. The main difference is that processes running in a container are ordinary native processes on the host, so there is none of the extra overhead associated with hypervisor execution. In addition, containers can reuse libraries, and data can be shared between containers.

Have you ever wondered what a hypervisor is...?

A hypervisor, also known as a virtual machine monitor or VMM, is software that creates and runs virtual machines (VMs). A hypervisor allows one host computer to support multiple guest VMs by virtually sharing its resources, such as memory and processing.

Why use a hypervisor?

Because a single host can run multiple VMs through a hypervisor, it reduces the need for:

- Physical space

- Energy

- Maintenance

How does a hypervisor work?

Hypervisors support the creation and management of virtual machines (VMs) by abstracting a computer’s software from its hardware. Hypervisors make virtualization possible by translating requests between the physical and virtual resources. Bare-metal hypervisors are sometimes embedded into the firmware at the same level as the motherboard basic input/output system (BIOS) to enable the operating system on a computer to access and use virtualization software.

Containers vs Hypervisor........

Hypervisor....

- Allow an operating system to run independently from the underlying hardware through the use of virtual machines.

- Share virtual computing, storage and memory resources.

- Can run multiple operating systems on top of one server.

Container.....

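- Allow applications to run independently of the host's OS distribution and configuration (while still sharing the host's kernel).

- Can run on any operating system that has a container engine; Linux containers on Windows or macOS run inside a lightweight Linux VM.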

- Are extremely portable since in a container, an application has everything it needs to run.

Docker Architecture.................

Before going into the Docker architecture, let us first understand what Docker Engine is, what it does and what its components are.

The figure below illustrates Docker Engine; have a look at it.

Docker Engine is the engine behind Docker's "build once, run anywhere" property. Its major functions include:

- Container Management.

- Image Management.

- Network Management.

- Data Volume Management.

Major Components of Docker Engine:

- Docker Daemon: a persistent background process (dockerd) that manages Docker images and containers, along with storage volumes and networks. The daemon also handles pulling and building images on user/client request.

- REST API: a web service interface used by end users or clients to interact with the Docker daemon.

- Docker CLI: the Docker command-line interface (the docker command), through which users and clients interact with Docker; it talks to the daemon through the REST API.

Now that we understand Docker Engine, let us move on to the core part: the Docker architecture.

At the top of the Docker architecture are the registries. By default, the main registry is Docker Hub, which hosts public and official images such as those for Linux distributions, Oracle products and Docker itself. Users and organizations can also host private registries for the private images they want to work with.

On the right-hand side we have images and containers. Images can be downloaded from a registry explicitly (using the command docker pull imageName) or implicitly when starting a container. Once an image is downloaded, it is cached locally.

Containers are running instances of images; you can have multiple containers running from the same image, depending on the task and requirements. If images are the static templates, containers are the living things.

At the center is the heart of the Docker architecture, the Docker daemon, which is responsible for creating, running and monitoring containers. It also takes care of building and storing images.

Finally, on the left-hand side is the Docker client, through which users interact with the Docker daemon's HTTP-based REST API. When the client and the daemon run on the same machine, this traffic goes over a Unix socket (by default /var/run/docker.sock on Linux); for remote management, the same API is exposed over a TCP port instead.
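To make the Unix-socket path more concrete, here is a minimal Java sketch (Java 16 or newer, for UnixDomainSocketAddress) that talks to the daemon's REST API directly, much as the Docker client does under the hood. It assumes the daemon listens on the default Linux socket /var/run/docker.sock and that your user is allowed to access it (root, or a member of the docker group). DockerPing, buildRequest and send are illustrative names, not part of any Docker library. The request goes to GET /_ping, an Engine API endpoint that answers "OK" when the daemon is up.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.net.StandardProtocolFamily;
import java.net.UnixDomainSocketAddress;
import java.nio.ByteBuffer;
import java.nio.channels.SocketChannel;
import java.nio.charset.StandardCharsets;
import java.nio.file.Path;

public class DockerPing {
    // Default daemon socket on Linux; assumption: your installation may use another path.
    static final Path DOCKER_SOCKET = Path.of("/var/run/docker.sock");

    // Builds a minimal HTTP/1.1 GET request for an Engine API path such as "/_ping".
    // "Connection: close" asks the daemon to close the socket after replying,
    // so the response can simply be read until end-of-stream.
    static String buildRequest(String apiPath) {
        return "GET " + apiPath + " HTTP/1.1\r\n"
                + "Host: localhost\r\n"
                + "Connection: close\r\n"
                + "\r\n";
    }

    // Sends one request over the Unix socket and returns the raw HTTP response
    // (status line, headers and body) as text.
    static String send(Path socket, String apiPath) throws IOException {
        try (SocketChannel channel = SocketChannel.open(StandardProtocolFamily.UNIX)) {
            channel.connect(UnixDomainSocketAddress.of(socket));
            ByteBuffer out = ByteBuffer.wrap(buildRequest(apiPath).getBytes(StandardCharsets.US_ASCII));
            while (out.hasRemaining()) {
                channel.write(out);
            }
            ByteArrayOutputStream response = new ByteArrayOutputStream();
            ByteBuffer in = ByteBuffer.allocate(4096);
            while (channel.read(in) != -1) {
                in.flip();
                response.write(in.array(), 0, in.limit());
                in.clear();
            }
            return response.toString(StandardCharsets.UTF_8);
        }
    }

    public static void main(String[] args) {
        try {
            System.out.println(send(DOCKER_SOCKET, "/_ping"));
        } catch (IOException e) {
            // Missing socket, daemon not running, or no permission to use the socket.
            System.err.println("Could not reach the Docker daemon: " + e.getMessage());
        }
    }
}
```

Swapping "/_ping" for "/containers/json" returns, as JSON, the list of running containers that docker ps would show.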

Towards Installing Docker............

I am documenting the installation for Linux only, but that does not mean Docker doesn't support other operating systems: it supports Linux, Windows (which needs the Hyper-V layer enabled), macOS, etc. Here I discuss installing Docker natively on a Linux system.

Depending on the target distribution, installing Docker can be as easy as running sudo apt-get install docker.io (on Debian or Ubuntu).

On Linux, you normally prefix Docker commands with sudo (unless your user has been added to the docker group).

Because the Docker daemon (the core of the Docker architecture) uses Linux-specific kernel features, it isn't possible to run Linux containers natively on macOS or Windows; that is why a virtualization layer such as Hyper-V has to be enabled there.

Using Docker: Towards Application.......

Let us take an example....

docker run phusion/baseimage echo "Hello Users,Rushi welcomes you to blog."

Expected Output:

Hello Users,Rushi welcomes you to blog.

Looking at the back-end process.......

- The image is first downloaded from Docker Hub (if it is not already in the local cache) and stored locally.

- A container based on this image is started.

- The echo command is executed inside the container, isolated from the host.

- The container stops as soon as the echo command finishes.

When you run the above container for the first time, you may notice a delay before the text is printed. This is because the image has to be downloaded from the central repository; had it already been cached locally, everything would have taken a fraction of a second.

You can retrieve details about the last executed container using:

docker ps -l

CONTAINER ID   IMAGE                      COMMAND                  CREATED         STATUS                     PORTS   NAMES
af14bec37930   phusion/baseimage:latest   "echo 'Hello Users…"     3 minutes ago   Exited (0) 3 seconds ago           Rushi_Trivedi

Diving into the next example......

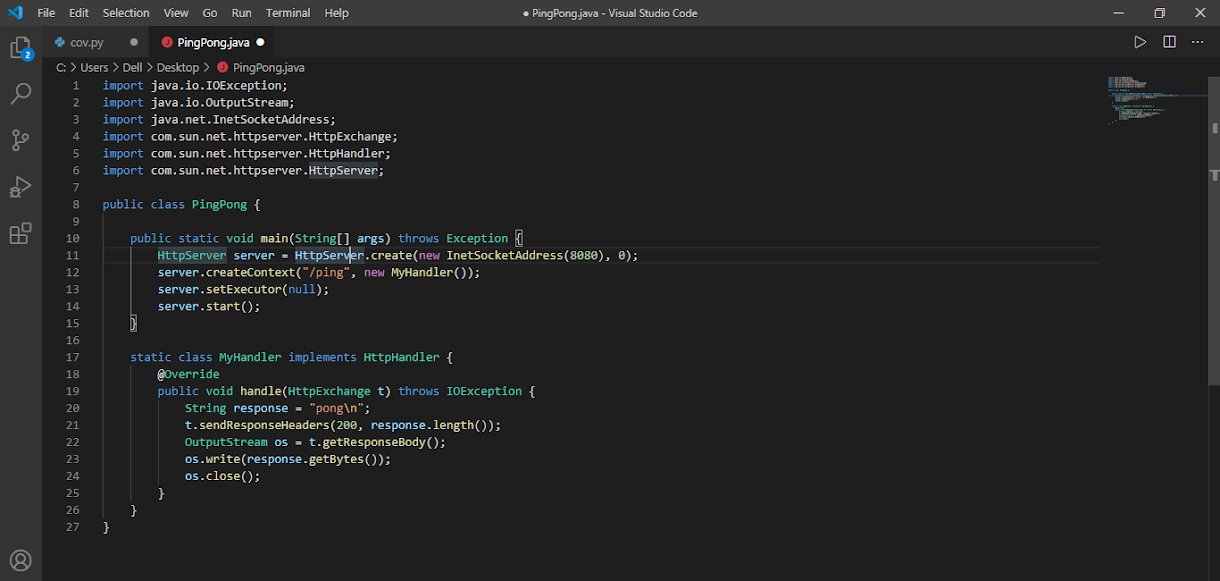

As you can see, running a simple command in a container is as easy as running it directly in a terminal. Let us take a more practical use case and deploy a simple web server application using Docker: a simple Java program that handles HTTP GET requests to '/ping' and responds with the string 'pong\n'.
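The post does not show the source of PingPong.java, so here is a minimal sketch of what it might look like, assuming the JDK's built-in com.sun.net.httpserver package (available in Java 8, which matches the Java 8 base image used in the Dockerfile). The class has to be called PingPong and live in the default package so that the javac PingPong.java and java PingPong steps work; the helper names and the 405 reply for non-GET requests are illustrative choices, not the original author's code.

```java
import com.sun.net.httpserver.HttpExchange;
import com.sun.net.httpserver.HttpServer;
import java.io.IOException;
import java.io.OutputStream;
import java.net.InetSocketAddress;
import java.nio.charset.StandardCharsets;

public class PingPong {
    static final String RESPONSE = "pong\n";

    // Creates (but does not start) a server whose "/ping" context answers GET with "pong\n".
    // Note: HttpServer matches contexts by path prefix, so "/ping/..." is handled too.
    static HttpServer createServer(int port) throws IOException {
        HttpServer server = HttpServer.create(new InetSocketAddress(port), 0);
        server.createContext("/ping", PingPong::handle);
        return server;
    }

    private static void handle(HttpExchange exchange) throws IOException {
        if (!"GET".equals(exchange.getRequestMethod())) {
            exchange.sendResponseHeaders(405, -1); // Method Not Allowed, no body
            exchange.close();
            return;
        }
        byte[] body = RESPONSE.getBytes(StandardCharsets.UTF_8);
        exchange.sendResponseHeaders(200, body.length);
        try (OutputStream os = exchange.getResponseBody()) {
            os.write(body); // closing the stream also completes the exchange
        }
    }

    public static void main(String[] args) throws IOException {
        // Port 8080 matches the EXPOSE 8080 / -p 8080:8080 settings used in this post.
        createServer(8080).start();
        System.out.println("Listening on port 8080");
    }
}
```

Taking the port as a parameter lets the same class listen on 8080 inside the container or on a random free port (0) in a local test.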

Creating the Dockerfile...........................

Before diving in and building a Docker image, it's good practice to check for an existing image on Docker Hub or in any private repository you can access.

To build an image, we first need to decide which base image to use; the base image is specified with the FROM instruction. For simplicity, we use the official Java 8 image from Docker Hub as our base image. We then copy our Java source file into the image using a COPY instruction.

Next, we compile it using a RUN instruction. The EXPOSE instruction declares that the container provides its service on a particular port (it only documents the port; publishing it on the host is done with -p at run time). ENTRYPOINT specifies the command executed whenever a container based on the image is started, and CMD supplies the default parameters passed to it.

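# The "java" image has been deprecated on Docker Hub; a maintained JDK 8 image such as eclipse-temurin:8-jdk can be substituted
FROM java:8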

COPY PingPong.java /

RUN javac PingPong.java

EXPOSE 8080

ENTRYPOINT ["java"]

CMD ["PingPong"]

After saving these instructions in a file called “Dockerfile”, we can build the corresponding Docker image by executing:

docker build -t toptal/pingpong .

Running Container................

Once the image is built, we can bring our own container to life. There are several ways to do this; let's start with the simplest one:

docker run -d -p 8080:8080 toptal/pingpong

where -p [port-on-the-host]:[port-in-the-container] maps a port on the host to a port in the container. The -d flag tells Docker to run the container detached, as a background process. You can check that the web server application is running by opening ‘http://localhost:8080/ping’.

Hurrayyyy! Our first Docker container is alive and swimming. We could also start the container in interactive mode with -i -t. In this case, we override the entrypoint so that we are presented with a bash terminal. We can then execute whatever commands we want, but exiting the shell will stop the container:

docker run -i -t --entrypoint="bash" toptal/pingpong

There are many options for starting containers; let's cover a few more.
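If you prefer to check from code instead of a browser, the following Java 11+ sketch sends GET /ping with java.net.http.HttpClient and reports whether the answer is exactly "pong\n". It assumes the container was started with the docker run -d -p 8080:8080 command above, so the service is reachable at http://localhost:8080; the class and method names are hypothetical.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.time.Duration;

public class PingCheck {
    // Returns true if GET <baseUrl>/ping answers 200 with exactly "pong\n".
    static boolean isPongServer(String baseUrl) {
        HttpClient client = HttpClient.newBuilder()
                .version(HttpClient.Version.HTTP_1_1)
                .connectTimeout(Duration.ofSeconds(2))
                .build();
        HttpRequest request = HttpRequest.newBuilder(URI.create(baseUrl + "/ping"))
                .timeout(Duration.ofSeconds(2))
                .GET()
                .build();
        try {
            HttpResponse<String> response = client.send(request, HttpResponse.BodyHandlers.ofString());
            return response.statusCode() == 200 && "pong\n".equals(response.body());
        } catch (IOException e) {
            return false; // connection refused, timeout, etc.
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return false;
        }
    }

    public static void main(String[] args) {
        // Assumption: the container publishes port 8080 on this host.
        String baseUrl = args.length > 0 ? args[0] : "http://localhost:8080";
        boolean ok = isPongServer(baseUrl);
        System.out.println(ok ? "pingpong is up" : "pingpong is NOT reachable at " + baseUrl);
        System.exit(ok ? 0 : 1);
    }
}
```

The non-zero exit code on failure makes the same check usable as a simple smoke test in a script or CI job.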

For example, if we want to persist data outside the container, we can share part of the host filesystem with the container using -v host-path:container-path. By default the access mode is read-write (append :ro to make it read-only). Volumes are particularly important when we need security information such as credentials and private keys inside containers, since these shouldn't be stored in the image. Volumes can also prevent duplication of data, for example by mapping your local Maven repository into the container so you don't have to download the Internet twice.

Docker can also link containers together. Linked containers can talk to each other even if none of their ports are published. This is done with --link other-container-name. (Note that --link is a legacy feature; Docker now recommends user-defined networks created with docker network create.)

Other Container and Image Commands......
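As a sketch of the credentials use case, assume a host directory holding a password file is mounted read-only with a hypothetical option such as -v /host/secrets:/secrets:ro. The Java code below (Java 8 compatible) then reads the secret at runtime from /secrets/db-password, a made-up path, instead of baking it into the image; MountedSecret and readSecret are illustrative names only.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;

public class MountedSecret {
    // Hypothetical location inside the container, provided by: -v /host/secrets:/secrets:ro
    static final Path DEFAULT_SECRET_FILE = Paths.get("/secrets/db-password");

    // Reads a secret from a file supplied through a volume, trimming the trailing newline.
    static String readSecret(Path file) throws IOException {
        return new String(Files.readAllBytes(file), StandardCharsets.UTF_8).trim();
    }

    public static void main(String[] args) throws IOException {
        Path file = args.length > 0 ? Paths.get(args[0]) : DEFAULT_SECRET_FILE;
        String secret = readSecret(file);
        // Never print the secret itself; only confirm that it was found.
        System.out.println("Loaded a " + secret.length() + "-character secret from " + file);
    }
}
```

Because the file lives on the host, rotating the password only means changing the host file and restarting the container; the image never contains it.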

- stop – Stops a running container.

- start – Starts a stopped container.

- commit – Creates a new image from a container’s changes.

- rm – Removes one or more containers.

- rmi – Removes one or more images.

- ps – Lists containers.

- images – Lists images.

- exec – Runs a command in a running container.

The last command is particularly useful for debugging, as it lets you open a terminal inside a running container:

docker exec -i -t <container-id> bash

Docker Compose for the MicroService World............

If you have more than just a couple of interconnected containers, it makes sense to use a tool like docker-compose. In a configuration file (docker-compose.yml by default), you describe how to start the containers and how they should be linked to each other. Regardless of the number of containers involved and their dependencies, you can then have all of them up and running with one command: docker-compose up.

Docker towards Project Life Cycle Management...................

Docker helps you keep your local development environment clean. Instead of having multiple versions of different services installed such as Java, Kafka, Spark, Cassandra, etc., you can just start and stop a required container when necessary. You can take things a step further and run multiple software stacks side by side avoiding the mix-up of dependency versions.

With Docker, you can save time, effort, and money. If your project is very complex to set up, “dockerise” it. Go through the pain of creating a Docker image once, and from this point everyone can just start a container in a snap.

You can also have an “integration environment” running locally (or on CI) and replace stubs with real services running in Docker containers.

Testing / Continuous Integration

With a Dockerfile, it is easy to achieve reproducible builds. Jenkins or other CI solutions can be configured to create a Docker image for every build. You could store some or all images in a private Docker registry for future reference.

With Docker, you only test what needs to be tested and take environment out of the equation. Performing tests on a running container can help keep things much more predictable.

Another interesting benefit of software containers is that it is easy to spin up worker machines with an identical development setup. This can be particularly useful for load testing clustered deployments.

Production

Docker can be a common interface between developers and operations personnel, eliminating a potential source of friction. It also encourages the same image/binaries to be used at every step of the pipeline. Moreover, being able to deploy a fully tested container without environment differences helps ensure that no errors are introduced in the build process.

You can seamlessly migrate applications into production. Something that was once a tedious and flaky process can now be as simple as:

docker stop container-id; docker run new-image

And if something goes wrong when deploying a new version, you can always quickly roll back or switch to another container:

docker stop container-id; docker start other-container-id

… guaranteed not to leave any mess behind or leave things in an inconsistent state.

Summary

- Build – Docker allows you to compose your application from microservices, without worrying about inconsistencies between development and production environments, and without locking into any platform or language.

- Ship – Docker lets you design the entire cycle of application development, testing, and distribution, and manage it with a consistent user interface.

- Run – Docker offers you the ability to deploy scalable services securely and reliably on a wide variety of platforms.

Finally, have fun swimming with the whales!
